更新: 升级到v.0.1.1, 由于爬约400个单词后会冒出人机验证, v.0.1.1加了限流:100/900s (每小时400访问)。可自行用 .env 定制限流:

# .env

SCRAPER_CALLS=50

SCRAPER_PERIOD=800

整了个 pypi 包(我自己有相关需要),不知道有没人用得上。

安装或升级:pip install scrape-glosbe-dict -U

使用:

- scrape-glosbe-dict 词头文件 # 一行一个单词或词组, 英-中

- scrape-glosbe-dict 词头文件 -f de # 德-中

帮助 scrape-glosbe-dict --help

开源:https://github.com/ffreemt/scrape-glosbe-dict/

欢迎使用反馈。

3 个赞

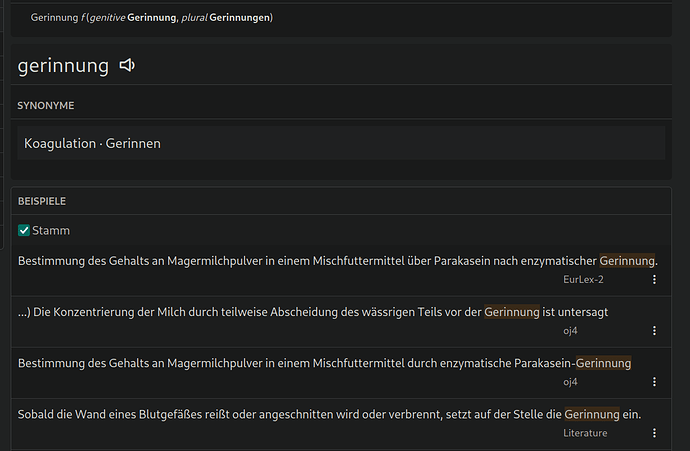

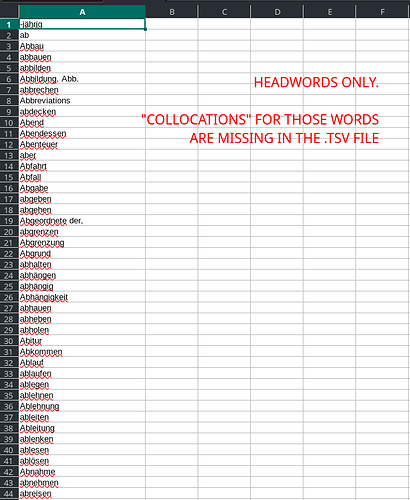

I want to scrape the “Collocations” (usage examples) but the script fails to download them.

I want the German-German results and the script succesfully runs (Manjaro Linux) but the .tsv file only shows the headwords and not the “Collocations”.

Is this script only usefult to grab translations and not the “Collocations” ?

How use it?

e.g. German to Portuguese?

python -m scrape_glosbe_dict [OPTIONS] head-word-file

[OPTIONS]?

Thanks a lot.

Well, based on https://glosbe.com/de/pt/machen you’d have

python -m scrape_glosbe_dict -f de -t pt head-word-file

or in more verbose format

python -m scrape_glosbe_dict --from-lang de --to-lang pt head-word-file

Don’t get your hopes too high though. The website asks for a “Human test” after about 400 words, meaning it would be a sloooooooow process for big head-word-files.

Perhaps try a .env file containing (there are 86400 seconds in one day  )

)

SCRAPER_CALLS=400

SCRAPER_PERIOD=86400

1 个赞

scrape-glosbe-dict only extracts two parts (translations and less-frequent-translations) from glosbe’s html page. Collocations can also be extracted. Just find (e.g. via devtools) the corresponding css-selector or xpath. scrape-glosbe-dict makes use of pyquery (https://github.com/ffreemt/scrape-glosbe-dict/blob/main/scrape_glosbe_dict/scrape_glosbe_dict.py). Other packages (e.g., lxml or event bs4) can also be used.

2 个赞

Thanks a lot.

I will try…

1 个赞